End-to-End Video Compressive Sensing Using Anderson-Accelerated Unrolled Networks

Yuqi Li, Qi Miao, Rahul Gulve, Mian Wei, Roman Genov, Kyros Kutulakos, Wolfgang Heidrich, ICCP, 2020

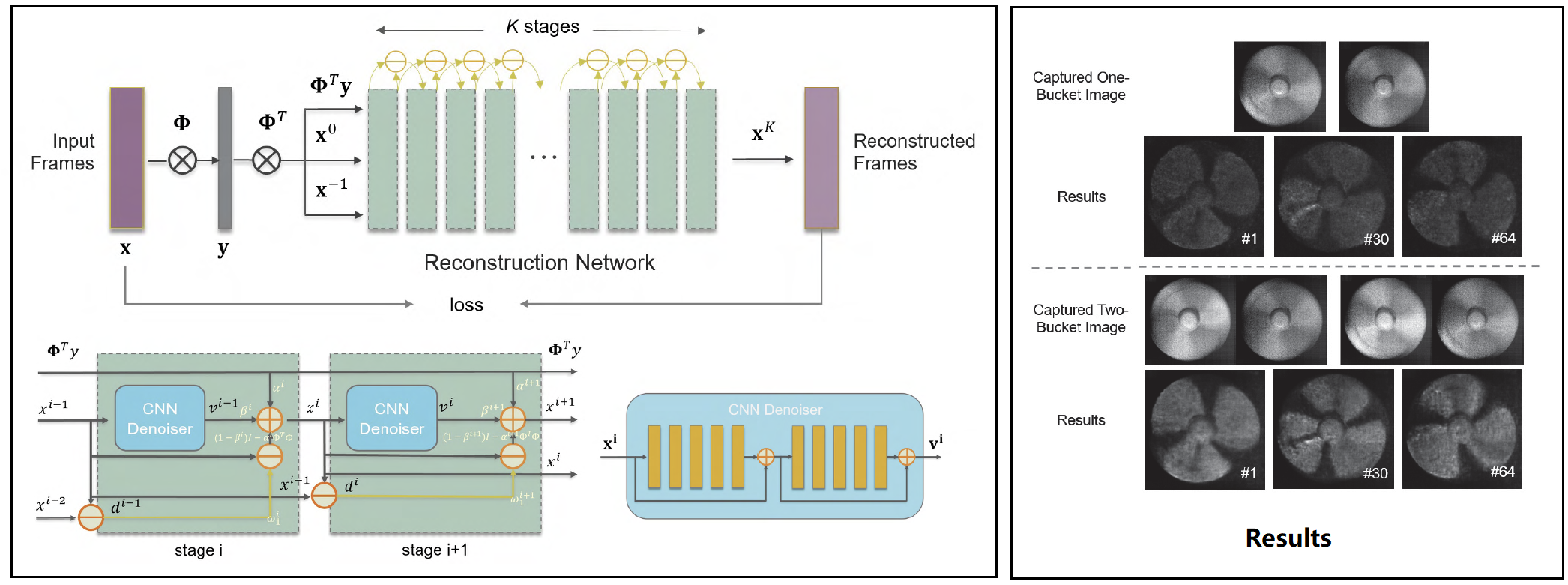

Figure 1: Our deep network architecture and reconstructed results.

Abstract

Compressive imaging systems with spatial-temporal encoding can be used to capture and reconstruct fast-moving objects. The imaging quality depends heavily on the choice of encoding masks and reconstruction methods. In this paper, we present a new network architecture to jointly design the encoding masks and the reconstruction method for compressive high-frame-rate imaging. Unlike previous works, the proposed method takes full advantage of a denoising prior to provide a promising frame reconstruction. The network is also flexible enough to optimize full-resolution masks and efficient at reconstructing frames. To this end, we develop a new dense network architecture that embeds Anderson acceleration, known from numerical optimization, directly into the neural network architecture. Our experiments show that the optimized masks and the dense accelerated network achieve improvements of 1.5 dB and 1 dB in PSNR, respectively, without adding training parameters. The proposed method outperforms other state-of-the-art methods both in simulations and on real hardware. In addition, we set up a coded two-bucket camera for compressive high-frame-rate imaging, which is robust to imaging noise and provides promising results when recovering nearly 1,000 frames per second.

Resources

Paper_fullres: [Yuqi2020HighFrameRate.pdf]

Code: [Code]
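
For readers unfamiliar with the Anderson acceleration mentioned in the abstract, the following is a minimal sketch of the classical technique applied to a generic fixed-point iteration x = g(x). It is not the network from the paper: the function name `anderson_fixed_point`, its parameters (`window`, `max_iter`, `tol`), and the toy linear map used in the demo are all assumptions made for illustration. The idea is to combine the last few iterates so that the combined residual is as small as possible in the least-squares sense, rather than using only the most recent iterate.

```python
import numpy as np


def anderson_fixed_point(g, x0, window=5, max_iter=100, tol=1e-10):
    """Classical Anderson acceleration for x = g(x) (illustrative sketch).

    Returns the final iterate and the number of iterations performed.
    """
    x = np.asarray(x0, dtype=float)
    g_hist, f_hist = [], []  # recent values of g(x_k) and residuals g(x_k) - x_k
    for k in range(max_iter):
        gx = g(x)
        f = gx - x
        if np.linalg.norm(f) < tol:
            return x, k
        g_hist.append(gx)
        f_hist.append(f)
        # Keep at most `window` differences, i.e. window + 1 history entries.
        g_hist = g_hist[-(window + 1):]
        f_hist = f_hist[-(window + 1):]
        if len(f_hist) == 1:
            # Not enough history yet: take a plain fixed-point step.
            x = gx
            continue
        # Columns are differences of consecutive residuals and g-values.
        d_f = np.stack([f_hist[i + 1] - f_hist[i] for i in range(len(f_hist) - 1)], axis=1)
        d_g = np.stack([g_hist[i + 1] - g_hist[i] for i in range(len(g_hist) - 1)], axis=1)
        # Least-squares coefficients that best cancel the current residual.
        gamma, *_ = np.linalg.lstsq(d_f, f, rcond=None)
        x = gx - d_g @ gamma
    return x, max_iter


# Toy demo (hypothetical problem): a linear contraction g(x) = A x + b.
A = np.diag([0.9, 0.5, -0.3])
b = np.ones(3)
x_star = np.linalg.solve(np.eye(3) - A, b)  # exact fixed point for comparison
x_acc, iters = anderson_fixed_point(lambda x: A @ x + b, np.zeros(3))
```

On this toy problem the plain iteration contracts by only a factor of 0.9 per step along the slowest direction, which is the kind of slow convergence that reusing past iterates is meant to shorten. How the paper embeds this idea into the layers of an unrolled network is described in the paper and code linked above.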

Citation

@inproceedings{Yuqi2020HighFrameRate,

title={End-to-End Video Compressive Sensing Using Anderson-Accelerated Unrolled Networks},

author={Li, Yuqi and Qi, Miao and Gulve, Rahul and Wei, Mian and Genov, Roman and Kutulakos, Kiriakos and Heidrich, Wolfgang},

booktitle={2020 IEEE International Conference on Computational Photography (ICCP)},

pages={1--8},

year={2020},

organization={IEEE}

}